Human Integrity in Computed Realities

It was a cold autumn night, 10th of November 1619, when young René Descartes in his nightmares encountered an evil demon of deception for the very first time.

He would describe the beast years later in his First Meditation as a being “of the utmost power . . . [who] has employed all his energies in order to deceive me” (Descartes 14). This demon wields the power to deceive us through our senses, by which humans perceive the reality around them. That confrontation provoked Descartes to question the very existence of the physical world. The only disciplines he deemed certain were those that did not depend on physical examination, such as arithmetic.

As technology advanced over the next four centuries, it became abundantly clear who could play the role of the demonic creature from Descartes’ nightmares. With the rapid developments in computer science, virtual reality became an experience anyone with a piece of cardboard and a mobile phone can try. The visuals might not be as stunning as the ones produced by our brains during REM sleep, but let’s not forget that, as Elon Musk points out, “forty years ago we had Pong . . . two rectangles and a dot” (qtd. in Boult). And yet these technical imperfections did not stop over 100 million people from spending their time “in some sort of virtual world” (Lastowka 24) back in 2009.

Whilst the concept of brains in jars is not particularly new, recent developments in supersymmetric physics and string theory move the idea of simulated worlds from Hollywood blockbusters like The Matrix and The Thirteenth Floor into the science labs. With everyone’s attention focused on the big question – “is our world real?” – we seem to be missing another important issue: as far as ethics goes, we are not prepared to deal with the consequences of computed realms.

This dilemma needs to be addressed urgently – as things stand today, most of our legal and ethical systems are based on traditional physical existence. Even though certain countries, such as South Korea, do recognize crimes committed in virtual reality, most of their legislation deals with material losses resulting from online actions. For instance, the theft of virtual goods in an online game would be legally recognized as a felony because of the goods’ real-life value, i.e. the time and money invested in the items.

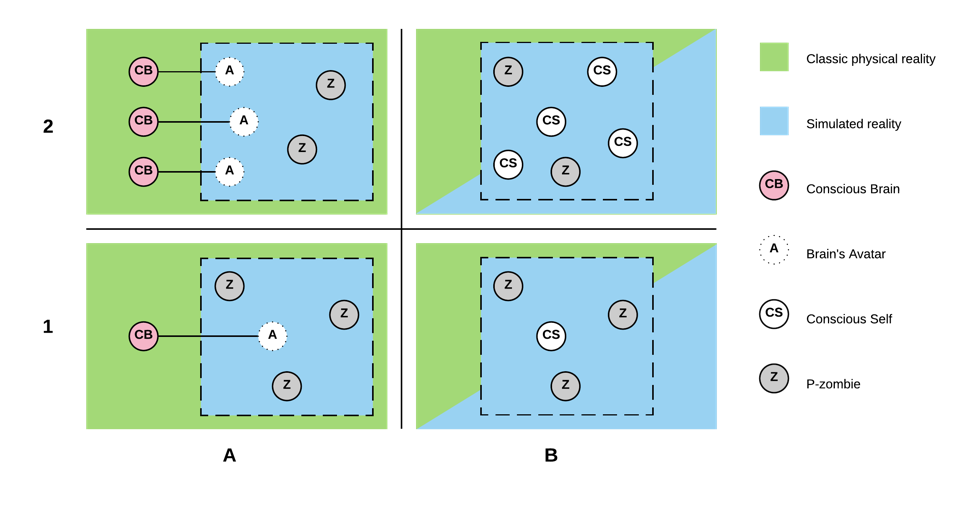

A growing number of scientists and inventors agree that if we do not live in virtual reality yet, we will end up in one someday. To illustrate the inevitability of this virtual revolution, I have put together a simple chart of possible outcomes of our galloping technical developments (see fig. 1). There are four scenarios, grouped by two factors.

The first factor defines the role of the biological brain in consciousness and perception. If there is a hidden “human soul” embodied in the fabric of the human brain, then the only possible way to experience conscious virtual reality is by intercepting the communication trunks between the brain and the rest of the nervous system (option A). On the other hand, as artificial neural networks become more robust and extensive, we might learn that it is possible to build and sustain a digital environment capable of housing sentient and conscious entities without the need for any biological vessels whatsoever (option B).

The second factor, the vertical dimension of the chart, describes the role of the other characters we encounter in the simulation. It is possible that the only conscious being wandering around is us, and that every other person we interact with is a philosophical zombie (p-z), designed and simulated along with the world (option 1). The concept of a zombie was popularized in contemporary thought experiments by David Chalmers, who describes it as “something physically identical to me, but which has no conscious experience” (96).

Despite this scenario’s counter-intuitive nature, it would be very pragmatic for simulations of advanced civilizations, as it would limit the size of the universe that needs to be calculated to the small part experienced by a single participant. For undeveloped civilizations – like ours – it would make no difference computation-wise. The alternative is an online reality, where multiple conscious entities interact with each other’s avatars (option 2), similar to a multiplayer computer game. It is safe to assume that there would be p-zombie characters even in an online world – a computer powerful enough to simulate complex physical phenomena would have no issues with something as trivial as impersonating humans.

The purpose of my classification is to present simulated worlds as something we are destined to experience. Currently, physicists are trying to determine whether our universe is continuous or discrete. The latter would make it a natural candidate for almost effortless computation, given enough energy. But even if we are not denizens of virtual reality just yet, we are trying our best to get there as quickly as possible. There are already movie theaters that stimulate all of our senses. We can send electrical signals directly into the brains of patients suffering from neurological disorders.

It is only a matter of time before these two worlds – video and neuroscience – collide, and when they do, there will be no going back. It might be the adult entertainment industry or it might be the army, but someone will spend the funds needed to streamline the production of this ultimate VR device. Naturally, the consequences for our species will reach far beyond ethics and morality. In the process, we might find ourselves on the verge of extinction – or we might end all wars and human misery – but one fact remains: if we are not living in a simulation yet, very soon we will be.

This brings me to a popular misconception: living in virtual reality does not mean our conscious self is being simulated. David Chalmers describes being conscious as being able “to instantiate some phenomenal quality” (26) and possess “reportability or introspective accessibility of information” (26). Based on these definitions, I believe it is entirely plausible to retain a free and self-aware will while interacting with a world created by a machine. And since our will remains free, we keep full responsibility for our choices and actions.

In order to evaluate ethical behavior, we should look at the most common product of environments deprived of ethics: harm. The Oxford Dictionary of English defines harm as: “physical injury, . . . material damage, . . . ill effects or danger” (Oxford 801). We can break it down into parts and evaluate whether they apply in a simulated reality. Let’s start with physical injury.

There are three direct consequences of physical trauma: pain, physical handicap and possibly death. Pain is an electrochemical signal processed by the brain, and as such can be easily simulated (option A: directly through the nervous system interface; option B: by simulating physical stimuli). We already deal with scenarios where pain is artificially amplified (for instance, in a chronic disorder named fibromyalgia) or reduced (for instance, by taking drugs which inhibit pain receptors). Both physical handicap and death, defined as a limited capability to interact with the simulated world – damaged senses, injured limbs, etc. – are functions of the environment, and as such will pose no difficulty for any simulator to mimic (both A and B).

The material damage component would be the easiest one to reproduce, since it does not affect the mental self in any way. Finally, ill effects and danger – or in other words, emotional trauma – are a function of the conscious self, which in our case is not simulated (even though the world in which it resides might be), and which therefore suffers from these factors directly.

Based on this simple analysis, I conclude that in a high-quality simulation, harm would be indistinguishable from its non-simulated counterpart. If this is the case, how should we address the morality of causing harm and the legal ramifications of virtual wrongdoing?

One aspect of simulated trauma has already crossed the boundary between the two worlds: psychological and emotional abuse. The first well-documented case of online sexual violence dates back to 1993. It happened in a text-based computer game named LambdaMOO. Although the game contained no graphic images, the events of one particular evening became widely known thanks to a written testimony by Julian Dibbell. His book, My Tiny Life, still serves as a reference point for the modern definition of “virtual crime”, detailing the actions of an abusive player who managed to take control of other people’s characters and had them involved in explicit sexual acts.

The incident happened in a simulated world, stripped of lights, colors and sounds. “No bodies touched. Whatever physical interaction occurred consisted of a mingling of electronic signals” (Dibbell 14). It was an unquestionably virtual event in a simulated world. And yet, it sparked actual emotions in actual human beings. In the book, one of the victims recollects how she “[found] herself in tears – a real-life fact that should suffice to prove that the words’ emotional content was no mere fiction” (Dibbell 16).

In response to the incident, the online community described by Dibbell designed and implemented a law of self-regulation, which allowed its members to “vote out” abusive individuals and temporarily prevent them from accessing the game. Repeat offenders would be handed a permanent ban – an irreversible termination of their simulated life.

It is difficult not to see a striking resemblance to Western legislation and capital punishment. Isn’t the death penalty a morbid analogue of being permanently banned from our world?

The issue we are unprepared to face is how to respond to an act of virtual harm in a simulated reality that has evolved to become a perfect manifestation of its real-life counterpart. Every time a new invention changes the way we live, it is usually followed by a set of legal constraints and conventions describing its proper use. Greg Lastowka uses the example of aviation in the early 20th century – it took a few decades for laws to emerge with respect to this revolutionary new form of travel (Lastowka 67).

I believe that harm in a simulation needs to be handled according to a few variables. The most important one is: does the subject control the simulation? In “A”-type scenarios, our participation in the virtual reality might be voluntary. If that is the case, the concept is probably going to be safe by design, despite being fully capable of electrocuting connected biological brains.

As long as three principles are met – the participant voluntarily transfers his consciousness into a virtual world, no irreversible harm is done to his brain, and he remains in control of the simulation (or voluntarily waives that control) – then in my opinion he should bear full responsibility for his experience.

As far as option “B” is concerned, things are actually even simpler: assuming our reality is computed, the existing laws and moral foundations were already built from within the simulation. As a consequence, we shouldn’t have to change them on account of our universe’s origins.

The gap between options “1” and “2” is much more challenging. The difficulty comes from the fact that we might not be able to distinguish p-zombies from sentient beings any time soon (just as it might take us some time to confirm the “B” theory). Since they – by definition – express all the emotional characteristics of human beings, we will have to look past psychology in order to identify them (Chalmers 95). For all I know, I might be the only conscious being on the face of the earth, and I will never be able to tell if that is the case.

But if we somehow do manage to flag the NPCs in the game of life (an NPC is a non-player character in a computer game), how do we go about interacting with them? Surely no moral or legal rules should regulate an interaction with a computer program, even when it looks and behaves like a human being and shows human emotions.

In an uncontrolled simulation, persons flagged as p-z will effectively be stripped of all civil rights. According to our current definition of “harm”, no action – no matter how cruel – against a p-z should call for punishment by society. If one day we do discover that the world around us is being simulated, a new digital witch hunt will begin. Hopefully by then we will possess the tools – and the will – to make this call with absolute certainty.

This leaves us with the most probable scenario, which – I believe – is right around the corner: a controlled simulation with no intended way to harm another conscious being, or A/1 according to my chart.

Despite appearing to be the most innocent, this scenario could bring the most destructive outcome of them all – because of its addictive nature. Imagine a twisted realm where Freud’s Eros and Thanatos can finally meld into an uncontrolled spiral of depraved urges, with no consequences whatsoever for the actor. The same applies to adrenaline rushes with no fear of injury, psycho-stimulation with no hangover, or the thrill of war with no risk of mortal wounds – to each according to their needs.

The appeal of this virtual world will be unmatched by anything real life has to offer. It will be a whole new reality, redefined. For a computer capable of neural brain stimulation, running a few p-zombie programs will be a walk in the park. Those who want a real relationship, family or friendship will be able to find them with no effort. And by definition, they won’t be able to tell the difference. Deviants wishing to act out their most aberrant fantasies will be able to do so with no fear of legal consequences.

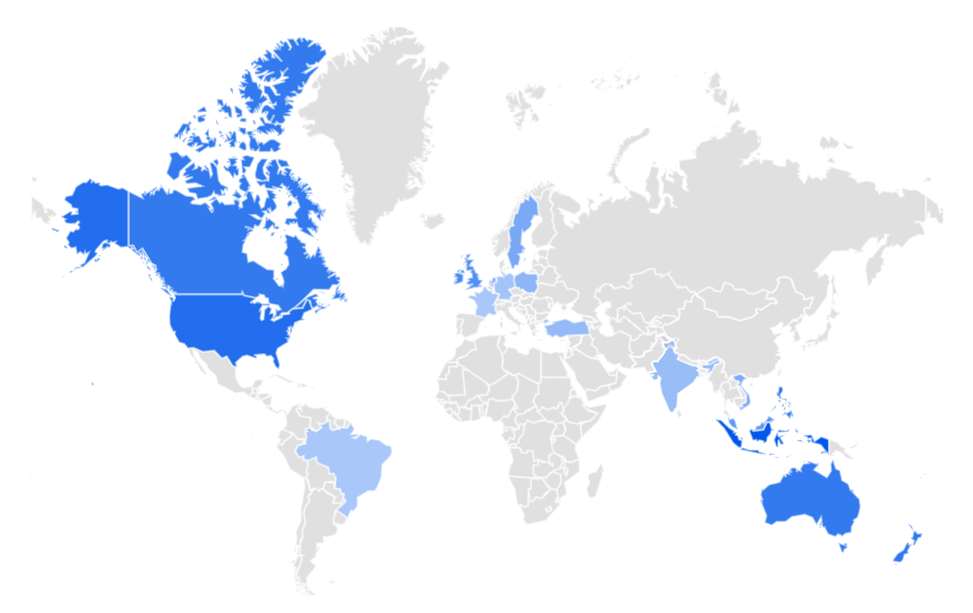

The uncomfortable truth is that real life has nothing on virtual reality, as long as one holds the reins to control it. Even today, lucid dreaming techniques – which offer the closest experience to a controlled simulation one can get – are gaining popularity, especially in developed countries (see fig. 2).

For all its irresistible appeal, the lack of moral grounds in VR worlds will affect us as a species. Let us put aside the fact that, with any imaginable achievement and reward available to everyone at their fingertips, the progress of our civilization might come to a standstill (as there will be no motivation to drive us forward). A much bigger risk is that this constant exposure to a world with no rules and pleasure with no sin will – without a doubt – affect our behavior outside the simulation. According to the New York Times and subsequent findings by the Huffington Post, in more than half of killings committed by Iraq veterans, the victims were family members or friends of the murderer (Sontag and Alvarez). These soldiers, exposed to the reality of war for a relatively short period of time, returned home to find themselves incapable of readapting to a non-violent environment.

Once we reach a stage where we can literally put – or grow – our brains in jars and live our lives in a computed reality, we will have robots to cater to our biological needs: brains need nutrition, computers need energy. Whether we decide to interact with each other or confine ourselves to secluded video stages filled with beautiful and friendly zombies, the risk of immoral conduct against the biological vessels remains. And with our only conscious parts fully immobilized, the consequences would be disastrous should an ill-intentioned agent gain the power to damage our civilization (of jelly blobs), like a veteran suffering from PTSD who targets his family.

Our ethical grounds are currently shackled by religions, family values, cultural identities and educational institutions, all of which claim credit for making us moral beings. Unfortunately, all of them will cease to exist – at least in their current form – once virtual realms kick in. Unless we elevate the righteousness and integrity of humankind to a new – more abstract – level, we might find ourselves unable to resist the destructive temptations of virtual worlds.

After all, rather than fighting the evil demon of deception, it is so much easier to just let go – “like a prisoner who is enjoying an imaginary freedom while asleep . . . he dreads being woken up, and goes along with the pleasant illusion as long as he can” (Descartes 14). If we face this new reality – virtual or not – unprepared, this might be a very short dream.

Works Cited

Boult, Adam. “We are ‘almost definitely’ living in a Matrix-style simulation, claims Elon Musk.” The Telegraph Technology. The Telegraph, 3 Jun 2016. Web. 17 Oct 2016.

Chalmers, David J. The Conscious Mind: In Search of a Fundamental Theory. New York: Oxford University Press, 1996. Print.

Descartes, René. Meditations on First Philosophy with Selections from the Objections and Replies. Cambridge: Cambridge University Press, 1996. Print.

Dibbell, Julian. My Tiny Life: Crime and Passion in a Virtual World. New York: Henry Holt and Company, 1998. Print.

Lastowka, Greg. Virtual Justice: The New Laws of Online Worlds. London: Yale University Press, 2010. Print.

Oxford Dictionary of English. Oxford: Oxford University Press, 2010. Print.

Sontag, Deborah, and Lizette Alvarez. “Across America, Deadly Echoes of Foreign Battles.” The New York Times U.S. The New York Times, 13 Jan 2008. Web. 13 Oct 2016.